How To Build White-label AI Voice Agents? [Complete Guide]

Building an AI voice agent isn’t just about adding speech to your product – it’s about creating an engaging, human-like experience that keeps users coming back.

AI voice agents are getting closer to delivering human-like experiences with natural fluency and learned speech behaviors. Whether offered as a service or a solution, AI voice agents are gaining traction among businesses. Many businesses have already launched their own agents and report around a 15% increase in customer retention.

As AI agents become hyper-realistic and achieve lower latency, the market is expanding at almost 34% year over year.

There are multiple ways to build an AI voice agent. In this guide, you’ll learn how to build voice agents that listen, reason, and respond naturally during a real-time voice call.

- What a Voice AI Agent Is: Understand the core concept, components, and technologies (STT, NLP, NLU, TTS) that make voice agents work.

- Why Build a Custom Voice Agent: Learn how personalization, custom voices, and brand-aligned experiences improve user engagement and retention.

- Step-by-Step Guide: Discover the exact process to build a custom AI voice agent using the MirrorFly SDK, from project setup to deployment.

- Design & UX Principles: Learn how to make agents sound natural, look human-like, and create immersive user experiences without triggering the uncanny valley effect.

- Challenges & Solutions: Explore the common technical, ethical, and design challenges in voice AI development and how MirrorFly's AI voice agent solution helps overcome them.


1. What is a Voice AI Agent?

A voice AI agent is an intelligent software system that uses artificial intelligence to understand, interpret, and respond to people during a conversation. It uses speech recognition and natural language processing (NLP) to interact in a natural, human-like tone and voice over the phone or other voice-based channels.

2. Why Build a Custom AI Voice Agent?

The key reason most businesses build their own custom AI agent is the experience they aim to provide to their customers. Brands that want more than an agent that simply does the job focus on creating highly interactive, personalized, and engaging experiences for their users.

Here is a more in-depth discussion on why one would build custom agents for voice assistance:

2.1 Personalization and Immersion

A voice agent's first impression is its voice. Customize it as much as possible, starting with a personalized TTS voice for your customers.

One great example of this kind of customization is Project Viva.

The goal of Project Viva is to create a virtual reality campus guide for Bryn Mawr College. Nadine Adnane, the developer of Project Viva and a former computer science student at Bryn Mawr College, felt it would be more fitting and personalized if the agent were voiced by an actual student at the college. So she voiced the agent herself.

- Adnane used Microsoft Azure’s Cognitive Services to build her “Custom Voice Font”

- She utilized Carnegie Mellon University’s (CMU) Arctic transcript file, which contains 1132 carefully selected sentences, as the text to read for her recordings

- The recordings were made using a Blue Yeti Blackout USB microphone

As a result, her project connected users with the agent more effectively and increased overall immersion, and the agent, Viva, received favorable responses.

Custom voices that are warm, fast, and supportive give agents a distinct personality and identity, making it comfortable and seamless for users to connect with them.

2.2 Enhanced User Experience and Engagement

Besides custom voices, you also have the option to personalize the agent's visual humanoid representation.

What does visual humanoid representation include?

It typically includes an avatar, a talking head, or a figurine with realistic lip-syncing. Paired with humanized voices, these personalized agents draw people closer to them.

This, in turn, makes interactions more dynamic and participatory than a traditional digital interface.

These agents do not focus only on their functional goals. They go above and beyond to create enjoyable experiences through human-like delivery and playful responses, such as telling jokes or singing songs. This helps users connect with the agent through the persona they prefer.

2.3 Control over Voice Characteristics

When you build your own AI voice agent, you’ll have greater control over verbal and paraverbal elements of the agent. These elements include:

- Verbal elements (linguistic content): diction (word choice), syntax (sentence structure), and semantics (meaning).

By customizing these elements, you can enable your agent to emphasize context, maintain the flow of the conversation, and stay clear on its subject matter.

- Paraverbal elements (how the message is delivered): pitch, volume, speech rate, pauses, pronunciation, articulation, timbre, and breathiness. These cues convey emotion, tone, attitude, and communicative intent of the agent.

Building your own AI voice agent gives you the freedom to dynamically adjust these parameters to match the context of the conversation.

For example, you can configure the agent to slow down when conversing with children, or modulate the pitch when the user’s voice sounds frustrated.
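One common way to apply such adjustments is SSML, the W3C Speech Synthesis Markup Language, whose `<prosody>` element accepts `rate`, `pitch`, and `volume` attributes. The sketch below is a minimal illustration, assuming your TTS provider accepts SSML. The context names and preset values are hypothetical, and some providers require extra elements (such as `<voice>`) around the content.

```python
from xml.sax.saxutils import escape

# Hypothetical presets mapping conversation context to SSML prosody values.
# The values are illustrative starting points, not tuned recommendations.
PROSODY_PRESETS = {
    "default": {"rate": "medium", "pitch": "medium", "volume": "medium"},
    "child": {"rate": "slow", "pitch": "+2st", "volume": "medium"},
    "frustrated_user": {"rate": "slow", "pitch": "-2st", "volume": "soft"},
}


def to_ssml(text: str, context: str = "default") -> str:
    """Wrap text in an SSML <prosody> element chosen by conversation context."""
    p = PROSODY_PRESETS.get(context, PROSODY_PRESETS["default"])
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">'
        f'<prosody rate="{p["rate"]}" pitch="{p["pitch"]}" volume="{p["volume"]}">'
        f"{escape(text)}"  # escape &, <, > so user text cannot break the markup
        "</prosody></speak>"
    )


if __name__ == "__main__":
    print(to_ssml("Let's take this one step at a time.", "frustrated_user"))
```

Unknown contexts fall back to the default preset, so a misclassified emotion degrades to neutral delivery rather than an error.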

2.4 Addressing Limitations of Pre-made Voice Agents

There are more than 150 popular companies building pre-made voice agents. These TTS voice agents undoubtedly deliver high-quality performance, but they often lack the customization required for a unique experience.

Not many agents allow businesses to use customized voices generated from an external source or vendor. This limits the ability of the brand to deliver a personalized experience to their customers. Oftentimes, the voices available in the pre-made agents either sound too robotic or do not match the persona of the brand.

In these cases, building your own custom AI voice agent helps you overcome these personalization challenges, since you can import whichever voices you prefer.

Overall, building your own voice agent gives you all the tools to craft truly unique, context-specific, and deeply engaging experiences for your users. Generic voice agents cannot replicate what a custom voice agent can deliver to your customers, whether in response context or in style and personality.

3. Six Steps To Build an AI Voice Agent With MirrorFly

In this section, we will discuss the six crucial stages of developing a custom AI voice agent. Our goal is to develop an autonomous voice agent that can hold human-like conversations with users and resonate with them emotionally.

Most AI voice services help you build self-contained AI agents. These services focus only on apps and agents for dictation, kiosks, or voice assistants like Alexa and Siri. But if your business needs an AI agent that handles real-time voice calls, meetings, and in-app chatbots, you need to go beyond TTS services. That's where you'll need MirrorFly.

Here are the elaborated steps to build an AI voice agent with MirrorFly:

Step 1: Conceptualisation and Project Setup

When you start building your AI voice agent, the first step is to identify the purpose of your agent.

- Why are you building this system?

- What are its functional goals for your business?

Once you have clear answers, you can start setting up the project on the technical front.

1. Define Core Components:

When the user starts speaking to the agent, the microphone on the user's device captures the audio. Your agent needs speech recognition technology to process this audio input.

Once it takes in the input, Natural Language Processing (NLP) and Natural Language Understanding (NLU) help the agent understand the context of what was said. Next, the agent prepares its response using LLMs or pre-fed transcripts and delivers it through the response generation system. Speech synthesis then converts this text response into speech. Finally, the UI renders the visual output on the device screen and plays the audio output through the speakers.

In systems where you need real-time communication, an SDK like MirrorFly helps get the speech data from conversations for STT and NLP processes.
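The flow above can be sketched as four pluggable stages. This is a minimal illustration, not MirrorFly's or any vendor's API: the `VoicePipeline` class and the stub stages are hypothetical, and in practice each callable would wrap your chosen STT, NLU, LLM, and TTS services.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chains the stages of one conversational turn; each stage is pluggable."""
    stt: Callable[[bytes], str]      # speech recognition: audio -> transcript
    nlu: Callable[[str], dict]       # NLP/NLU: transcript -> intent + context
    respond: Callable[[dict], str]   # response generation (LLM or scripted)
    tts: Callable[[str], bytes]      # speech synthesis: text -> audio

    def handle_turn(self, audio_in: bytes) -> bytes:
        transcript = self.stt(audio_in)
        understanding = self.nlu(transcript)
        reply_text = self.respond(understanding)
        return self.tts(reply_text)


if __name__ == "__main__":
    # Stub stages so the sketch runs without any external service.
    pipeline = VoicePipeline(
        stt=lambda audio: audio.decode("utf-8"),  # fake STT: the "audio" is already text
        nlu=lambda text: {"intent": "greet" if "hello" in text.lower() else "unknown"},
        respond=lambda u: "Hi there!" if u["intent"] == "greet" else "Could you repeat that?",
        tts=lambda text: text.encode("utf-8"),    # fake TTS: returns text as bytes
    )
    print(pipeline.handle_turn(b"Hello agent"))
```

Keeping each stage behind a plain callable makes it easy to swap vendors (for example, Whisper for Google Speech) without touching the rest of the turn logic.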

2. Set up Development Environment:

Once you are ready with the core technologies, you’ll need to decide where to build the AI agent.

Here are some recommendations:

| Platform | Development Environment | Languages | AI/ML Tools & Runtimes |
|---|---|---|---|
| Android | Android Studio | Java, Kotlin | TensorFlow Lite, ML Kit, Dialogflow |
| iOS | Xcode | Swift, Objective-C | Core ML, TensorFlow Lite, Create ML |
| Web | Any code editor / VS Code | JavaScript, TypeScript | TensorFlow.js, Node.js, WebAssembly |

3. Manage Dependencies:

For real-time communication, MirrorFly takes care of the libraries for you. For AI services, you'll need a library or service for each technology.

Here are some recommendations:

- STT: Whisper, Google Speech or Azure

- NLP: Dialogflow, Rasa or GPT-based APIs

- TTS: Google, Amazon Polly or ElevenLabs

Note:

When you build your AI agent with MirrorFly, the solution includes these AI libraries and services. Still, if you prefer a specific vendor, you can discuss your requirements with the team and they'll customize the setup for you.

Step 2: Custom Text-to-Speech (TTS) Voice Creation

No one prefers an agent that sounds robotic. People expect an immersive experience when they converse with AI systems. A custom voice not only makes the agent unique but also helps users connect with it seamlessly.

So, how do you generate a custom voice for your business?

Use one of these services:

Note:

📌 Custom TTS voice creation services:

- Microsoft Azure Custom Neural Voice

- Amazon Polly Neural TTS (with Brand Voice)

- Google Cloud Text-to-Speech Custom Voice

- IBM Watson Text to Speech Custom Voice

- Speechify Custom Voice

- Nuance Vocalizer Custom Voice

- Acapela Custom Voice

- CereProc Custom Voice

- ReadSpeaker VoiceLab

- iSpeech Custom Voice

Pick one of these services and feed in your text transcripts along with your recordings. The service will generate a custom voice font curated for your business. The output is generally delivered as a URL endpoint, which you integrate into your AI agent system to activate the voice font.

How to prepare your text transcripts?

The internet has a large collection of open-source corpora with sentences carefully selected for speech synthesis.

Note:

📌 Source materials you can use:

- CMU Arctic Speech Corpus

- LJSpeech Dataset

- VCTK Corpus

- LibriTTS

- Blizzard Challenge Datasets

- M-AILABS Speech Dataset

- OpenSLR Datasets (e.g., SLR12, SLR13)

- TED-LIUM Corpus

- TIMIT Acoustic-Phonetic Continuous Speech Corpus

- Mozilla Common Voice

Check the data requirements of the TTS voice creation service you pick.

Some common rules include:

- Padding filenames with zeros (e.g., file1.wav → file0001.wav)

- Removing punctuation (e.g., “Hello, world!” → Hello world)

- Adding a tab delimiter between the filename and the sentence (e.g., file0001.wav\tHello world)

- Keeping sentences short (e.g., “The quick brown fox jumps over the lazy dog while the sun sets.” → “The quick brown fox jumps over the lazy dog.”)
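These rules are simple enough to automate before you start recording. The sketch below assumes four-digit zero padding, a tab delimiter, and a 20-word limit per sentence; these are illustrative defaults, so check them against your service's documented requirements. It keeps apostrophes so contractions stay readable.

```python
import re


def pad_filename(name: str, width: int = 4) -> str:
    """Zero-pad the trailing number in a filename: file1.wav -> file0001.wav."""
    m = re.fullmatch(r"([A-Za-z_-]*)(\d+)(\.\w+)", name)
    if not m:
        raise ValueError(f"unexpected filename: {name}")
    prefix, number, ext = m.groups()
    return f"{prefix}{int(number):0{width}d}{ext}"


def clean_sentence(text: str) -> str:
    """Remove punctuation (except apostrophes) and collapse whitespace."""
    no_punct = re.sub(r"[^\w\s']", "", text)
    return " ".join(no_punct.split())


def transcript_line(filename: str, sentence: str, max_words: int = 20) -> str:
    """Build one tab-delimited transcript line, rejecting overly long sentences."""
    cleaned = clean_sentence(sentence)
    n_words = len(cleaned.split())
    if n_words > max_words:
        raise ValueError(f"sentence too long ({n_words} words): {cleaned!r}")
    return f"{pad_filename(filename)}\t{cleaned}"


if __name__ == "__main__":
    print(transcript_line("file1.wav", "Hello, world!"))
```

Raising an error on long sentences, rather than silently truncating them, keeps the transcript and the recording script in sync.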

If the transcript meets the specific requirements of the service, select it and start recording in the voice you need.

In the background, your TTS service maps your audio files to the text in the transcript.

What are the recording conditions?

Make sure you use a high-quality microphone to record your transcripts.

Note:

📌 Microphone recommendations for HQ TTS voice recording:

- Audio-Technica AT2020

- Shure SM7B

- Rode NT1-A

- Neumann TLM 103

- AKG C214

- Shure MV7

- Blue Yeti Pro

- Rode NT-USB

- Samson Q2U

- HyperX FlipCast

Once you choose a recording device, you’ll need to configure it.

Since you are recording for TTS, you'll need to choose a specific polar pattern on your device. Pick one of the following:

- Cardioid: picks up sound primarily from the front and rejects sound from the sides and rear.

- Supercardioid: tighter front focus than cardioid, with a small rear pickup.

- Hypercardioid: an even narrower front focus, but slightly more sensitive at the rear than supercardioid.

These directional patterns play a critical role in capturing the speaker's voice cleanly while rejecting background noise.
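The trade-off between these patterns can be quantified with the idealized first-order polar equation, sensitivity(θ) = A + (1 - A)·cos θ, where A is 0.5 for cardioid and is commonly given as about 0.37 for supercardioid and 0.25 for hypercardioid. The sketch below computes pickup at the sides (90°) and rear (180°) relative to the front; real microphones deviate from these ideal curves, especially at very high and low frequencies.

```python
import math

# Idealized first-order patterns: sensitivity(theta) = A + (1 - A) * cos(theta).
PATTERNS = {"cardioid": 0.5, "supercardioid": 0.37, "hypercardioid": 0.25}


def sensitivity_db(a: float, angle_deg: float) -> float:
    """Pickup at angle_deg relative to on-axis (0 degrees), in dB.
    Returns -inf at a perfect null."""
    gain = abs(a + (1 - a) * math.cos(math.radians(angle_deg)))
    return 20 * math.log10(gain) if gain > 1e-9 else float("-inf")


if __name__ == "__main__":
    for name, a in PATTERNS.items():
        side = sensitivity_db(a, 90)
        rear = sensitivity_db(a, 180)
        print(f"{name:14s} side {side:6.1f} dB   rear {rear:6.1f} dB")
```

With these values, the cardioid is about -6.0 dB at the sides with a null at the rear, the supercardioid about -8.6 dB at the sides and -11.7 dB at the rear, and the hypercardioid about -12.0 dB at the sides but only -6.0 dB at the rear. That matches the descriptions above: a narrower front focus comes at the cost of more rear pickup.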

Spread your recording sessions out over time to avoid vocal fatigue.

Once your equipment is set up, use your device’s recording service or apps like Audacity to start the process.

This completes the voice recording for your AI agent.

How will you train the agent with the recording?

Upload the audio files and transcripts to your TTS voice creation service.

Once you have the data, you can train your agent's voice in three different ways:

| Training Method | Typical Dataset Size / Samples Needed | Notes |
|---|---|---|
| Statistical Parametric Synthesis | 30 – 300 minutes (~hundreds of utterances) | Works with limited recordings, lower naturalness |
| Concatenative Synthesis | 60 – 120+ hours (~6,000+ sentences) | High-quality, requires large, segmented dataset |
| Neural / Deep Learning-based Synthesis | 5 – 20+ hours (more improves quality) | Produces natural, expressive voice; benefits from diverse recordings |

Even with the best equipment and advanced technologies, custom voices may still sound a bit robotic or mispronounce words.

Don’t be surprised if your agent pronounces the name “Marion” as “Mary-on”. This is common, but you can monitor a few key quality factors and improve them.

| Factor | Metric / Measure | How to Improve |
|---|---|---|
| Signal-to-Noise Ratio (SNR) | dB (higher is better) | Use quiet, sound-proof recording environment; high-quality microphone |
| Pronunciation Accuracy | Phoneme Error Rate (PER), Word Error Rate (WER) | Provide clear, consistent recordings; annotate tricky words; use pronunciation dictionaries |
| Intelligibility / Clarity | Character Error Rate (CER), Mean Opinion Score (MOS) | Short, clear sentences; normalize volume; avoid overlapping speech |
| Prosody / Expressiveness | F0 variance, stress/duration modeling errors | Include diverse emotional/intonation samples; proper sentence phrasing |
| Timing / Duration Accuracy | Duration deviation (ms or % vs. reference) | Ensure consistent speaking rate; avoid rushed or overly long clips |
| Artifact Presence | Signal-to-Artifact Ratio (SAR), MOS | Use high-quality recordings; remove clicks, pops, or background noise |
| Consistency | Statistical variance in pitch, loudness, timbre | Maintain same recording setup; use same microphone and distance |
| Overall Naturalness | Mean Opinion Score (MOS, scale 1–5) | Large, diverse dataset; neural TTS models; post-processing like smoothing |
| Emotional Conveyance | MOS for emotion accuracy | Record expressive samples; label emotional context |
| Word Boundary Accuracy | Alignment error in phonemes or words | Clear articulation; use TTS-friendly scripts; review mispronounced words |
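Two of these metrics are easy to compute yourself. A common way to check the pronunciation accuracy of a synthesized voice is to transcribe its output with an ASR engine and compute WER against the original script. SNR compares the power of a speech clip with a noise-only clip, for example room tone recorded with the same setup. The sketch below works on plain Python sequences of samples; production tools usually also normalize text (numbers, abbreviations) before computing WER.

```python
import math
from typing import Sequence


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed with a word-level Levenshtein (edit) distance after lowercasing."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the previous ref prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution (or match)
        prev = curr
    return prev[-1] / len(ref)


def snr_db(signal: Sequence[float], noise: Sequence[float]) -> float:
    """SNR in dB = 10 * log10(mean signal power / mean noise power)."""
    p_signal = sum(x * x for x in signal) / len(signal)
    p_noise = sum(x * x for x in noise) / len(noise)
    return 10 * math.log10(p_signal / p_noise)
```

For example, `word_error_rate("call marion at noon", "call mary on at noon")` returns 0.5: one substitution ("marion" → "mary") and one insertion ("on") over four reference words, which is exactly the kind of mispronunciation described above.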

Step 3: Speech Recognition and Natural Language Understanding (NLU)

The steps above prepare the agent to deliver contextually relevant, natural-sounding responses.

Now, let’s see how to help your agent understand what users say during a voice call.

To recognize and understand speech in a generic AI system, a common cloud speech service like Microsoft Azure is sufficient. But for scenarios like video or voice calls, you'll need a real-time communication system that works with speech recognition, NLU, and NLP to capture and process the conversation data.

MirrorFly is a secure AI-powered CPaaS solution that bridges this gap. Its voice SDK captures real-time audio events and sends the voice data to Automatic Speech Recognition (ASR) providers like Azure Speech to Text, Google Speech, or Whisper.

During this process, MirrorFly handles audio encoding and streaming transport, so your agent system receives the conversation data it needs to understand the exact context.

Additionally, MirrorFly takes care of the security of the complete voice data transmission, with flexibility to add your own custom privacy layers.

The transcripts produced by the ASR provider are sent to an NLU platform like Dialogflow or Rasa, which detects the user's intent and extracts entities.

Your platform may handle many user conversations at once, and sometimes it hosts group conversations with an agent. How does the agent know which conversational data belongs to which user?
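To illustrate what intent detection and entity extraction produce, here is a deliberately simple rule-based stand-in. It is not how Dialogflow or Rasa work internally (they use trained models); the intent names, keyword sets, and phone-number pattern are hypothetical.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword rules standing in for a trained NLU model.
INTENT_KEYWORDS = {
    "check_weather": {"weather", "forecast", "temperature"},
    "send_message": {"text", "message", "send"},
}
# Loose phone-number pattern: optional +, then digits separated by spaces or hyphens.
PHONE_RE = re.compile(r"\+?\d[\d\s-]{6,}\d")


@dataclass
class NluResult:
    intent: str
    entities: dict = field(default_factory=dict)


def detect(transcript: str) -> NluResult:
    """Return the first intent whose keywords appear as whole words, plus entities."""
    tokens = set(re.findall(r"[a-z']+", transcript.lower()))
    intent = next(
        (name for name, words in INTENT_KEYWORDS.items() if tokens & words),
        "fallback",
    )
    entities = {}
    match = PHONE_RE.search(transcript)
    if match:
        # Normalize "555-123-4567" to "5551234567".
        entities["phone_number"] = re.sub(r"[\s-]", "", match.group())
    return NluResult(intent, entities)
```

Matching whole words rather than substrings avoids false hits such as "text" inside "context"; a trained model would additionally handle paraphrases the keyword lists miss.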

In this case, MirrorFly uses unique Session IDs, Room IDs or User IDs to tie the NLU results back to specific participants. For example, when you have 2 users in the same voice room, your agent listens to each speaker stream separately, runs ASR + NLU and responds intelligently.
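The per-participant bookkeeping can be sketched as a map keyed by room and user. The `SessionRouter` class and its method names are hypothetical illustrations, not MirrorFly's API; the point is only that each speaker's ASR and NLU results land in that speaker's own context.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ParticipantContext:
    """Conversation state the agent keeps for one speaker in one room."""
    history: list = field(default_factory=list)  # (transcript, intent) pairs


class SessionRouter:
    """Ties per-speaker ASR/NLU results back to a (room_id, user_id) key."""

    def __init__(self) -> None:
        self._contexts = defaultdict(ParticipantContext)

    def on_transcript(self, room_id: str, user_id: str,
                      text: str, intent: str) -> ParticipantContext:
        ctx = self._contexts[(room_id, user_id)]  # created on first utterance
        ctx.history.append((text, intent))
        return ctx

    def participants(self, room_id: str) -> list:
        return sorted(uid for (rid, uid) in self._contexts if rid == room_id)
```

Because the key includes the room, the same user can take part in several calls at once without their contexts mixing.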

1. Speech Synthesis (Voice Output)

Whether the task is speech-to-text or text-to-speech conversion, the STT or TTS provider generates its output locally, on the system where the conversion happens.

In this voice call scenario, MirrorFly’s voice SDK pushes the synthesized audio stream back into the MirrorFly session. This is how participants in the call hear the responses.

In other words, MirrorFly APIs inject the audio files or streams into live conversations, so the AI voice agent speaks inside the call room.

Remember, MirrorFly doesn’t replace ASR, NLU, or TTS. Rather, it enhances the overall pipeline by handling real-time communication.

MirrorFly SDK + Your AI Stack = AI agent for real-time communication
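Before synthesized speech is pushed into a live session, it is typically split into short fixed-duration frames (20 ms is a common choice in real-time audio). This sketch assumes 16 kHz, 16-bit mono PCM and uses a hypothetical `push_frame` callback in place of whatever SDK call writes audio into the room. It does not pace frames in real time, which a production sender would need to do.

```python
from typing import Callable, Iterator


def pcm_frames(pcm: bytes, sample_rate: int = 16_000, frame_ms: int = 20,
               bytes_per_sample: int = 2) -> Iterator[bytes]:
    """Split mono PCM into fixed-duration frames, zero-padding the last one.
    At 16 kHz and 20 ms: 320 samples * 2 bytes = 640 bytes per frame."""
    frame_bytes = sample_rate * frame_ms // 1000 * bytes_per_sample
    for start in range(0, len(pcm), frame_bytes):
        frame = pcm[start:start + frame_bytes]
        yield frame.ljust(frame_bytes, b"\x00")  # pad the final partial frame with silence


def inject(pcm: bytes, push_frame: Callable[[bytes], None]) -> int:
    """Push synthesized speech into a live session frame by frame.
    `push_frame` is a hypothetical stand-in for the SDK's audio-write call.
    Returns the number of frames sent."""
    count = 0
    for frame in pcm_frames(pcm):
        push_frame(frame)
        count += 1
    return count
```

Padding the last frame with silence keeps every frame the same size, which many real-time audio pipelines expect.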

Step 4: Embodiment and User Interface (UI) Design

Before we make our recommendations, I’d like to discuss a popular phenomenon called the “Uncanny Valley”.

It refers to the unease, eeriness, or discomfort people feel when they encounter non-human entities that look almost, but not quite, human.

This repulsion was a common response when roboticists embraced anthropomorphism, giving AI systems human-like attributes.

Because physical and software-based AI systems that fell into the uncanny valley did not win over a wider audience, developers moved to semi-realistic representations.

To give agents an immersive look and feel, businesses adopted four approaches with a minimalistic UI:

- Profile photo of the AI agent’s avatar

- Logo of the brands

- Talking head: only the head of the agent

- Full character design (2D/3D)

For the first two options, you will not need much effort; your in-house design team can handle them. But for building a talking head or a full character design, you’ll need software like Synthesia, HeyGen, or Veed that makes the task simpler and quicker. Using these tools, you can customize your AI agent’s look, including hair, skin tone, outfits, and eye color.

Once you create your character model or animation, make sure to secure copyright protection for it. This helps prevent unauthorized parties from using or redistributing your AI agent avatars commercially.

1. UI Recommendations:

A voice agent does not strictly require an avatar. Still, it is worth getting one right, so that users looking at their screen during the conversation have a truly immersive experience.

User engagement may suffer if your AI agent has just one stern, lifeless face. With advances in UI for AI, you can build agents that look and sound close to a real human, which increases the time your users spend conversing on a call.

Here are some recommendations:

- Variety: Create a variety of animations for each feeling, such as happy, sad, curious, interested, and frustrated. Layer these animations so your agent can empathize with the user’s context and respond with appropriate facial expressions.

- Eye Contact: You can use an Eyecontroller class to configure your agent to look like it is making eye contact with the user. This class can constantly update the gaze and also override other animations.

- Lip Syncing: You can implement a ‘ventriloquist-like’ effect or ‘shape keys’ to manipulate how the agent’s jaw or lip-bone must move for each vowel or consonant.

- Splash Screen: Include a custom splash screen in the opening of a conversation to give users a professional feel.

- Menus: Add a settings pane that gives control to users over agent voice volume, background changes, sound effects and conversation topics.

- Visual enhancements: Add post-processing effects and customize the lighting to make the agent look and feel warm for a conversation.
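For the eye-contact recommendation, the core math is a look-at calculation. Below is a hypothetical `EyeController` in the spirit of the class mentioned above, assuming x points right, y up, and z forward from the agent toward the user (for example, the camera position). The clamp limits are illustrative; a game engine's built-in look-at or IK utilities would normally do this work.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _clamp(value: float, limit: float) -> float:
    return max(-limit, min(limit, value))


@dataclass
class EyeController:
    """Aims the eyes at a target point. When `override` is True, the computed
    gaze replaces the eye angles coming from the animation layer."""
    max_yaw_deg: float = 35.0    # illustrative limits on eye rotation
    max_pitch_deg: float = 25.0
    override: bool = True

    def look_at(self, eye: Vec3, target: Vec3) -> Tuple[float, float]:
        dx, dy, dz = (t - e for t, e in zip(target, eye))
        yaw = math.degrees(math.atan2(dx, dz))                     # left/right
        pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))   # up/down
        return _clamp(yaw, self.max_yaw_deg), _clamp(pitch, self.max_pitch_deg)

    def update(self, eye: Vec3, target: Vec3,
               animated: Tuple[float, float]) -> Tuple[float, float]:
        """Call once per frame; gaze wins over the animation when override is on."""
        return self.look_at(eye, target) if self.override else animated
```

Clamping keeps the eyes within a believable range; when the target moves outside it, turning the head rather than the eyes usually looks more natural.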

Step 5: Design Considerations

An AI agent's voice combines both verbal and para-verbal elements. You’ll need to fine-tune the details of these components to make your voice agent sound more human-like.

1. Verbal elements:

Verbal elements cover the specific words your agent uses, along with how it constructs sentences and the grammatical structures it forms.

Here are a few critical factors you need to take into consideration when building your agent’s verbal elements:

- Diction: Your agent must be able to choose words from a variety of registers (formal, informal, or colloquial) according to the scenario and context. Just make sure it does not overuse jargon, which may confuse users.

- Verbal richness: Train your agent to express the same message in different words. Varied phrasing naturally makes users want to keep conversing with your agent.

- Syntax: Depending on the context, your agent may choose declarative sentences or interrogative sentences. Train the agent to emphasize information, persuade the user or ask questions for understanding the context more clearly, without annoying the user.

- Semantics: With NLP and NLU, your agents understand the meaning of words and respond with relevant combinations of words and expressions. Make sure the agent uses simple words so your users can process them easily.
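Verbal richness can start with something as simple as rotating through paraphrases so the agent never repeats the same line twice in a row. The `PhraseVariator` class and the variant lists are hypothetical; in practice, the variants might be written by your team or generated by an LLM.

```python
import random
from typing import Dict, List, Optional


class PhraseVariator:
    """Picks a paraphrase for an intent, avoiding back-to-back repeats."""

    def __init__(self, variants: Dict[str, List[str]], seed: Optional[int] = None):
        self._variants = variants
        self._last: Dict[str, str] = {}
        self._rng = random.Random(seed)  # seedable for reproducible tests

    def say(self, intent: str) -> str:
        options = self._variants[intent]
        # Exclude the previous pick; fall back to all options if only one exists.
        candidates = [o for o in options if o != self._last.get(intent)] or options
        choice = self._rng.choice(candidates)
        self._last[intent] = choice
        return choice
```

For example, `PhraseVariator({"confirm": ["Got it.", "Sure thing.", "Okay, done."]}).say("confirm")` returns one of the three confirmations, and consecutive calls never return the same one.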

2. Para-verbal elements:

These are cues that convey the emotion, tone, attitude, and communicative intent of the agent. You may need to train the agent with the right cues for each emotion or context to deliver a human-like experience.

Here are a few recommendations to get it right:

- Pitch: This is the frequency or ‘melodic height’ of a syllable. In general, if the agent’s voice is in low-pitch, users may tie it to competence or trustworthiness. On the other hand, if the agent speaks in high pitch, it may reflect extraversion or nervousness.

- Volume: Similar to pitch, volume also signals emphasis or emotion of an agent. If the volume is low, the agent may sound sad or tender. Whereas, if the volume is high, the agent may sound dominant or happy.

- Speech rate: This is the tempo at which the agent speaks. If the rate is fast, it represents persuasiveness or enthusiasm. If slow, it may sound as if the agent is sad or dominant.

- Pauses: These are the noticeable moments of silence while the agent speaks. Moderate pauses suggest the agent is reflecting, or give users time to think and process the response. In contrast, excessive or badly timed pauses may reduce the agent’s credibility.

- Pronunciation: This represents the correct formation of specific words, letters and syllables without colloquial influence. However, if the user configures colloquial pronunciation in the agent settings, your agent must be able to implement it accordingly.

- Articulation: This shows how clear and distinct the agent’s speech is. The agent must articulate a message in a more persuasive or empathetic tone, according to the context of the conversation.

- Timbre: This is the color or quality of the voice tone. For example, your agent may have a specific advertising tone when talking about a product and its features.

- Breathiness: These are audible air puffs in a conversation. If your agent has light breathiness, it sounds warmer, making the conversation more natural.

Step 6: External API Integrations

Once you have set up the voice agent’s functionality and UI, the next step is expanding its capabilities through third-party extensions, APIs, or plugins.

Your agent may primarily take care of conversational functions, but there are some nice-to-have features you can add to it.

Here’s what we recommend:

- Weather: Your agent may answer from your knowledge base. But what if your users want to know what the weather is? Add weather plugins that can tell users the temperature or forecast when asked. Or include them in a conversation to make it more natural and relevant.

- Messaging: Don’t let your users abandon your agent and leave for a messaging app to send texts. Add integrations and set custom intents that let the agent ask whether it should draft a message and send it when the user mentions a phone number.

- Email: Based on the conversation, you can have your agent draft an email and send it to the recipient the user mentions on the call.

- Mapping: You can also add custom maps like campus or workplace maps, or customer demographics to visually guide the users for queries based on locations.

You can add as many external plugins and extensions as you prefer. MirrorFly supports third-party APIs, so you can keep extending your voice agent’s capabilities.
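A common way to organize such integrations is a registry that maps detected intents to plugin handlers. This is a minimal sketch with hypothetical intent names and stub handlers; real plugins would call a weather, SMS, email, or maps API and handle errors and user confirmation.

```python
from typing import Callable, Dict

Handler = Callable[[dict], str]


class PluginRegistry:
    """Routes a detected intent (plus extracted entities) to an integration."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, intent: str):
        def decorator(fn: Handler) -> Handler:
            self._handlers[intent] = fn
            return fn
        return decorator

    def dispatch(self, intent: str, entities: dict) -> str:
        handler = self._handlers.get(intent)
        if handler is None:
            return "Sorry, I can't help with that yet."
        return handler(entities)


registry = PluginRegistry()


@registry.register("send_message")
def send_message(entities: dict) -> str:
    # Stub: a real plugin would call a messaging API after the user confirms.
    number = entities.get("phone_number")
    if not number:
        return "Who should I send the message to?"
    return f"Should I draft a message to {number}?"


@registry.register("check_weather")
def check_weather(entities: dict) -> str:
    # Stub: a real plugin would query a weather API for the requested city.
    city = entities.get("city", "your area")
    return f"Let me check the forecast for {city}."
```

New integrations only need a decorated handler, so adding email or maps support does not require changing the dispatch logic.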

4. Challenges in Building Custom AI Voice Agents

Building a custom AI voice agent is not easy. You’ll come across a range of technical, design, and ethical challenges. If left unaddressed, these challenges can significantly affect the development of your AI voice agent and seriously harm human-agent interaction.

Although there’s always a way out, here are the detailed challenges in building custom AI voice agents that you must consider in your development process.

Your custom AI agent needs to sound natural. Achieving a human-like agent comes with significant hurdles:

4.1 Voice Creation (TTS)

- Hard to make voices sound truly natural, risk of robotic tone or mispronunciations.

- Recording requires a soundproof environment and a lot of audio samples.

- Advanced, natural-sounding voices need large datasets and costly tools.

- Many services restrict exporting custom voices, limiting flexibility.

4.2 Speech Recognition & Understanding

- AI can mishear or misunderstand what users say.

- Matching user intent is error-prone, especially with evolving NLP tools.

- Integration with APIs takes time, debugging, and expertise.

- Real-time, hands-free interaction is technically complex.

4.3 Design & User Experience

- Legal risks if using copyrighted models or assets.

- Technical issues with 3D avatars (glitches, “uncanny valley” visuals).

- Lip-syncing and natural animations require significant effort.

- Realistic eye contact and body language need specialized coding.

4.4 Technical Infrastructure

- Managing libraries and dependencies often causes setup headaches.

- High-quality graphics can cause performance slowdowns.

- Porting to multiple platforms (mobile, VR, AR) requires extra optimization.

- Hardware limitations slow development.

4.5 Research & Ethical Considerations

- Privacy concerns around “always-on” microphones and voice cloning.

- Most testing excludes diverse user groups (children, elderly, non-Western).

- Risk of bias (e.g., default female voices, biased datasets).

- Many studies lack standardized measures, making results unreliable.

- Agents operate in siloed ecosystems (Google, Apple, Amazon), limiting cross-platform use.

5. Building a Custom AI Voice Agent with MirrorFly

Creating an AI voice agent comes with many challenges, but with the right platform, you can overcome them and build powerful, scalable, and secure AI-powered systems.

MirrorFly provides everything you need to design, customize, and scale your own white-label AI voice agent with complete control over features, security and infrastructure.

Here are the key reasons why developers and businesses choose MirrorFly:

- 100% customizable: MirrorFly is completely customizable. You control the features, the security, and much more.

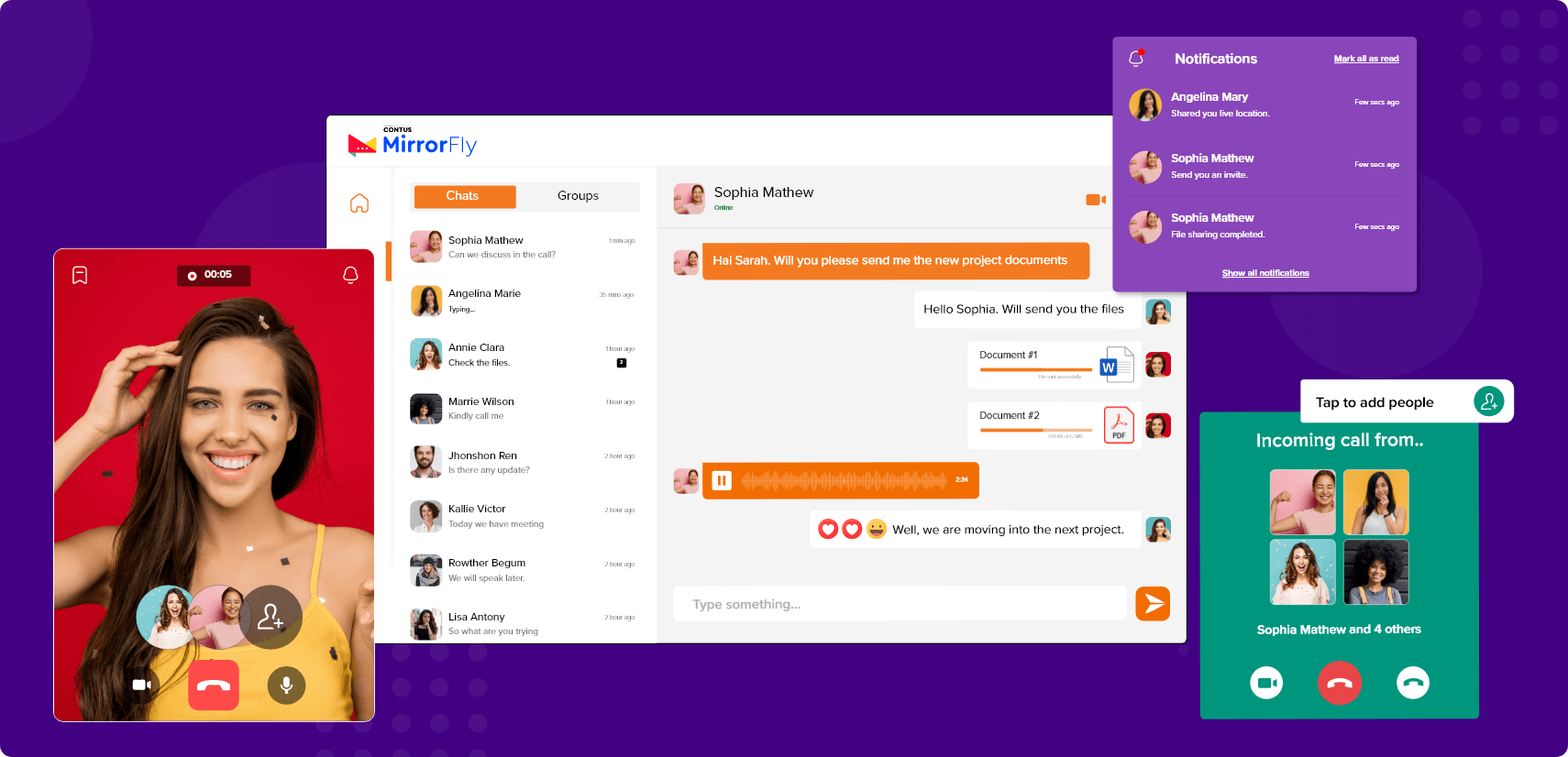

- 1000+ Communication Features: When you build your AI agent, it is empowered with 1000+ real-time features that your users will enjoy.

- 15+ Communication APIs: Integrate voice, chat, and video seamlessly with customizable APIs and drive billions of conversations with your AI agents.

- 12+ AI Agent Solutions: Upgrade your platforms with secure, production-ready conversational AI solutions.

- Custom Security: Don’t worry about the security of agent-user conversations. You get end-to-end encryption along with compatibility with GDPR, HIPAA, and OWASP guidelines. Plus, you can add any privacy and compliance layers your business demands.

- Full Data Control: You get complete access to the data. Not even MirrorFly will be able to access user information shared with the agent during the conversation.

- Flexible Hosting: Deploy your AI agent wherever you want – on-premises, private cloud, your own servers or MirrorFly’s multi-tenant cloud servers.

- White-label Solution: Launch your AI agent under your own brand identity with full white-label options.

- Full Source Code Ownership: Enjoy complete access to the source code, customize almost anything or everything you want.

- SIP/VoIP Solution: Enable reliable, enterprise-grade calling capabilities with built-in SIP and VoIP support.

With MirrorFly, you’re not just building any AI voice agent – you’re building the most powerful and customizable AI voice agent. Want to get started with your AI agent? Book a Free Consultation now!

Build Your Own White-label AI Voice Agent With MirrorFly for your business.

Connect with our specialists and get your custom build + deployment plan. Get started with our solution in the next few minutes!
